Interrupts, as their name implies, may interrupt the program flow of a microprocessor at any point and at any time, although sometimes they seem to do it intentionally in the most harmful way possible. Their very reason for existing is to let the processor handle events when it is not convenient to wait for them or to poll regularly for their status, and to handle events that require fast, low-latency attention.

When carefully used, interrupts are a powerful ally; we can even build multitasking schemes by assigning an independent periodic interrupt to each task.

When haphazardly used, interrupts may bring more problems than solutions; in particular, we can’t share variables or subroutines without taking due precautions regarding accessibility, atomicity, and reentrancy.

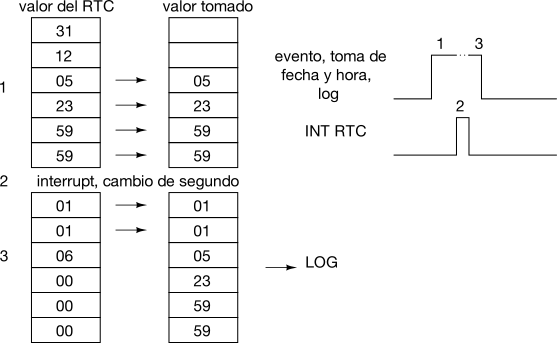

An interrupt may occur at any point in time, and if it occurs in the middle of a multi-byte variable read or update it can (and in fact does) wreak havoc on other tasks. These may end up holding “partially altered” values that are incorrect in the real world and probably dangerously incoherent for the software. A typical and frequently forgotten example is timing or date-and-time variables. The main program happily reads these variables byte by byte in order to show them on the display, without considering that, if they are updated by an interrupt, nothing forces that update to happen before or after our read rather than in the middle of it. If the values are only shown on the display there is no further inconvenience, since correct values will be shown one second later; but if they are logged or reported, we’ll be in serious trouble explaining why there is a record with a date almost a year off. For example, if the year is read just before the clock rolls over from 31 December to 1 January, and the month and day just after, the record says 1 January of the old year.
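
The torn read described above can be reproduced on a desktop compiler. The following is a minimal sketch, not code from any particular project: the `date_t` layout, the `rtc_date` variable and the function names are all hypothetical, and the interrupt is simulated by calling the handler by hand in the middle of the read.

```c
#include <stdint.h>

/* Hypothetical calendar record shared between the main program and a
   timer interrupt (names and layout are illustrative assumptions). */
typedef struct {
    uint8_t year;   /* years since 2000 */
    uint8_t month;  /* 1..12 */
    uint8_t day;    /* 1..31 */
} date_t;

static volatile date_t rtc_date = { 23, 12, 31 };   /* 31 Dec 2023 */

/* Stand-in for the timer ISR at midnight. Simplified on purpose:
   it only handles the year-end rollover. */
static void timer_isr_midnight(void)
{
    rtc_date.day = 1;
    rtc_date.month = 1;
    rtc_date.year++;
}

/* Main-program read, field by field. Calling the ISR in the middle
   simulates an interrupt arriving exactly at that point. */
static date_t read_date_torn(void)
{
    date_t copy;
    copy.year = rtc_date.year;     /* old year: 23 */
    timer_isr_midnight();          /* simulated interrupt */
    copy.month = rtc_date.month;   /* new month: 1 */
    copy.day = rtc_date.day;       /* new day: 1 */
    return copy;
}
```

Calling `read_date_torn()` returns 1 January 2023 while the clock itself now holds 1 January 2024, so the logged record is off by a year even though every individual byte read was correct.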

Some time ago there was a microcontroller-oriented development environment that, in order to “solve this inconvenience”, introduced the concept of ‘shared variables’. Every time one of these variables was accessed or changed, the compiler inserted a set of instructions to disable interrupts during the access and re-enable them at the end. The problem I find with this strategy is that it is very bad educationally speaking, as bad as protection diodes in GPIOs (commented in this article): the user does not understand what is going on, does not know, and doesn’t learn. Furthermore, frequent accesses to these variables produce frequent interrupt disables, which translate to latency jitter in interrupt handling.
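
Written by hand, the protection such a compiler inserts looks roughly like the sketch below. This is an assumption about the general shape, not the output of that environment: `irq_save_and_disable()` and `irq_restore()` are hypothetical stand-ins for whatever interrupt-control primitives the target provides, modeled here with a plain flag so the example runs anywhere, and `tick_count` is an invented shared variable.

```c
#include <stdint.h>
#include <stdbool.h>

/* Hypothetical stand-ins for the target's interrupt-control primitives.
   On real hardware these would map to the vendor's own intrinsics. */
static bool irq_enabled = true;
static unsigned irq_disable_count = 0;   /* how many times we masked */

static bool irq_save_and_disable(void)
{
    bool was_enabled = irq_enabled;
    irq_enabled = false;
    irq_disable_count++;
    return was_enabled;
}

static void irq_restore(bool was_enabled)
{
    irq_enabled = was_enabled;
}

/* 32-bit tick counter, assumed to be updated by a timer ISR (not shown). */
static volatile uint32_t tick_count = 0x0000FFFFu;

/* Copy the shared variable inside a short critical section. */
static uint32_t tick_count_read(void)
{
    bool state = irq_save_and_disable();
    uint32_t copy = tick_count;   /* multi-byte read, cannot be torn here */
    irq_restore(state);           /* back to whatever state we found */
    return copy;
}
```

Every call to `tick_count_read()` opens one interrupt-disabled window, which is exactly where the latency jitter comes from. Writing the critical section explicitly at least makes that cost visible at each call site, and restoring the previous state instead of enabling unconditionally keeps the function safe to call from code that already runs with interrupts masked.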

Even though this is quite evident for a multi-byte variable like the one in the example, the same happens with a 32- or 16-bit variable on 8-bit micros that lack proper instructions to handle 32-bit entities (most of them) or 16-bit entities. Atomicity, that is, the ability to access the variable as an indivisible unit, is lost, and when asynchronous tasks share access to these variables, those accesses can overlap and end in something like what we’ve seen in the example above.
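
To see why a plain integer is affected too, it helps to write a 16-bit read the way an 8-bit CPU performs it: as two separate byte loads. The sketch below models that by hand; the split into `counter_lo` and `counter_hi` and the function names are illustrative assumptions, and the interrupt is again simulated with a direct call.

```c
#include <stdint.h>

/* A 16-bit counter as an 8-bit CPU sees it: two separate bytes. */
static volatile uint8_t counter_lo = 0xFF;
static volatile uint8_t counter_hi = 0x00;   /* counter == 0x00FF */

/* Stand-in for the ISR that increments the counter, with carry. */
static void counter_isr(void)
{
    if (++counter_lo == 0) {
        counter_hi++;
    }
}

/* Two byte loads with a simulated interrupt between them. */
static uint16_t counter_read_torn(void)
{
    uint8_t lo = counter_lo;   /* 0xFF, taken from the old value 0x00FF */
    counter_isr();             /* counter becomes 0x0100 */
    uint8_t hi = counter_hi;   /* 0x01, taken from the new value */
    return (uint16_t)((hi << 8) | lo);
}
```

The function returns 0x01FF, a value the counter never held: it is neither the old 0x00FF nor the new 0x0100.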

If our C development environment has signal.h, we should find in it a definition of the sig_atomic_t type, an integer type that can be accessed as an atomic entity even in the presence of asynchronous interrupts. Otherwise, and as a general recommendation in these environments, the user must know the processor being used and act accordingly.
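
A common use of sig_atomic_t is a flag set by the interrupt and polled by the main loop. The following is a minimal sketch under that assumption; `second_elapsed`, `timer_isr()` and `consume_second()` are hypothetical names, and the handler is an ordinary function here so the example can be compiled on any host.

```c
#include <signal.h>

/* Flag written by the interrupt handler and read by the main loop. */
static volatile sig_atomic_t second_elapsed = 0;

/* Hypothetical once-per-second timer ISR. */
static void timer_isr(void)
{
    second_elapsed = 1;   /* one indivisible store */
}

/* Called from the main loop: returns 1 once per flagged event. */
static int consume_second(void)
{
    if (second_elapsed) {       /* one indivisible load */
        second_elapsed = 0;     /* one indivisible store */
        return 1;
    }
    return 0;
}
```

Note that only each individual load or store is atomic. The test-and-clear pair in `consume_second()` is still two accesses, which is acceptable for a flag like this one but would not be for a counter that must never miss an event.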

In multiprocessing environments there are other issues that we will not list here.

Corollary

An interrupt may occur at any point in time, as long as it is enabled. The probability of this happening at the very moment we are accessing a multi-byte variable in a non-atomic way is pretty low, particularly if it is a brief, infrequent access that is not synchronized to interrupts. However, this probability is greater than zero, and so, given enough repetitions, it is likely to occur. Leaving the correct behavior of a device to the Poisson distribution should not be considered a good design practice…
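
To put a rough number on “given enough repetitions”, assume (purely for illustration) that each non-atomic access is hit independently with probability $p$, and that the device performs $N$ such accesses over its lifetime. Then the probability of at least one torn access is

$$
P(\text{at least one}) = 1 - (1 - p)^N \approx 1 - e^{-pN}.
$$

With the made-up values $p = 10^{-6}$ and $N = 10^{6}$ this gives $1 - e^{-1} \approx 0.63$; with $N = 10^{7}$ it gives $1 - e^{-10} \approx 0.99995$. A one-in-a-million event per access becomes close to a certainty in a device that runs long enough.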

This post contains excerpts from the book “El Camino del Conejo” (The Way of the Rabbit), translated with consent and permission from the author.