This blog post is currently at Embedded Related, so go there and read it !

https://www.embeddedrelated.com/showarticle/1540.php

Blog

On hardware state machines: How to write a simple MAC controller using the RP2040 PIOs

Hardware state machines are nice, and the RP2040 has two blocks with up to four machines each. Their instruction set is limited, but powerful, and they can execute an instruction per cycle, pushing and popping from their FIFOs and shifting bytes in and out.

The Raspberry Pi Pico does not have an Ethernet connection, but there are many PHY boards available… take a LAN8720 board and connect it to the Pico; you’re done. The firmware ? Introducing Mongoose…

Mongoose is a networking library designed to make it easy to handle many networking tasks, and across multiple platforms. It can run baremetal on a microcontroller like the RP2040, or with an RTOS (FreeRTOS, TI-RTOS), with or without a third-party TCP/IP stack (lwIP, FreeRTOS TCP); or it can run on an OS (Linux, Windows, MacOS) . It is written by and belongs to Cesanta Software; and it is also the core of Mongoose-OS.

The following is a discussion of the inner workings of a Mongoose code example. This code is open source and belongs to Cesanta, it can be found in this Github repository, from where the code snippets are taken. A higher level tutorial on the example, how to use it, and how to write a driver for Mongoose, is available at the Mongoose site. For details on connecting the hardware and using it, go to either site, or both…

Now, let’s start… As an Electronics Engineer, I’d rather do a bottom-up description, so I’ll start from where the title of this post suggests: the PIO state machines.

The RMII low-level driver

As the Ethernet physical interface is a twisted-pair cable, this reduces the burden: the software MAC controller does not need a CSMA/CD section implemented; even though negotiation is allowed, the driver works in full-duplex mode and so its task is to send and receive frames only.

Due to timing constraints and to keep hardware simple (using out-of-the-box boards) the RP2040 takes its clock from the LAN8720 chip and only the 10BASE-T mode is supported.

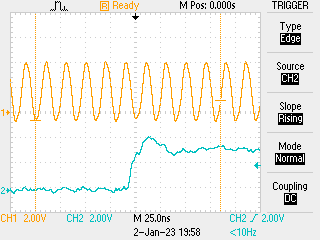

The RMII interface (a Reduced version of the Media Independent Interface) is synchronous, all data exchanged must be valid on the rising edge of a 50MHz master clock. This clock is fed to the RP2040 and both the CPU and the PIO state machines will run synchronous to this clock.

The interface consists of a transmit section and a receive section, in both of them data is exchanged in “di-bits” (a “2-bit nibble” or 2-bit bus), synchronous to the main clock but at 5MHz (2 bits x 5MHz = 10Mbps), with an additional signal each that indicates when data is valid. Frames are exchanged in their whole length, from the first preamble bit to the last CRC bit, LSb first.

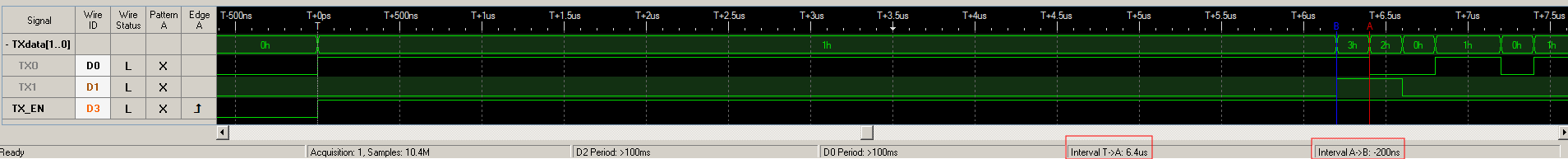

The Ethernet preamble consists of 64 bits, 62 of them are a 5MHz square wave, that is, 1010…. changing every 100ns. The last two bits break that with two consecutive ones: 11, the sync pattern. Then the usual MAC frame follows, ending with the 32-bit FCS (Frame Check Sequence), that is the CRC (Cyclic Redundancy Check) of the whole MAC frame using a specified 32-bit polynomial. Finally, a 12-byte (9.6 μs) silence period must follow, the IFG (Inter-Frame Gap). We must honor all this when sending, and sync to that when receiving.

The RMII interface also includes a management interface, the SMI, consisting of an MDIO bidirectional data line and an MDC clock that according to the specifications must not exceed 2.5MHz. Data is synchronous to this clock with some setup and hold constraints we’ll see later.

Sending a frame

To send a frame, the state machine needs to generate the preamble, then pop data out of the PIO FIFO and shift it to placetwo bits at a time, LSb first, on the pins connected to the PHY. Once a byte has been sent, it will pop another one from the FIFO until there are no more bytes; in which case it will stop and wait for more data. In other words: wait for the FIFO to have data, send the preamble, send the data until the FIFO gets full, and loop to wait again. The data will be pushed to the FIFO with a DMA controller, and the sending process plus the preamble delay is slower than the controller filling the FIFO, so this works.

Here is the PIO assembler code to do that; as the sending loop needs one additional cycle to check for more data in the FIFO, the state machine works at 10MHz, twice the data rate, so most instructions insert a 1-cycle delay to be synchronous to an imaginary 5Mhz clock in phase with the PHY 50MHz clock:

// pins 0, 1 map to TX0, TX1, respectively, and can be set or out. Pin 2 maps to TX_EN and is side_set controlled

// Runs at 10MHz, data is output in di-bits at 5MHz for 10Mbps

.program rmii_tx

.side_set 1

.wrap_target

set pins, 0b00 side 0 // Disable Tx

pull side 0 // Stall waiting for data to transmit

set X, 29 side 0 // load counter for 30 repetitions

set pins, 0b01 side 1 [1] // Enable Tx and Write 0b01, start sending preamble

hdrloop:

jmp X--, hdrloop side 1 [1] // "repeat" pattern 30 times, total 31 = 62-bit preamble

set pins, 0b11 side 1 [1] // Write 0b11, 2-bit sync; +2 = 64

dataloop:

out pins, 2 side 1 // Write data in dibits

jmp !OSRE dataloop side 1 // 2, wrap when no more data in FIFO

.wrapTo send the preamble, the code presents the bits 01 (remember this is LSb first so that will be 10 in the wire) to the PHY with the TX_EN line enabled; this line is controlled with the side-set facility. The code then loops for thirty clock periods (jmp X-- also executes when X is 0), to a total of 31 times, which corresponds to 31 di-bits, 62 bits (6.2 μs). Then it sends the sync pattern, 2 bits (200 ns), total 64 bits (6.4 μs).

Finally, the code sends all data until the FIFO is empty. At that point it wraps around and stalls waiting for the FIFO to be written, condition that will be satisfied when the next frame is presented to be sent.

State machine initialization code follows:

All pins are set as outputs, the TX pins to be controlled by the out and set instructions, and the TX_EN pin by the side-set operation. Since this machine only outputs data, both FIFOs are joined into the Tx FIFO. The machine shifts bytes LSb first.

Receiving a frame

When the PHY detects a valid carrier signal, it raises the CRS_DV line. The PIO state machine waits for this line to be high, then it tries to sync by waiting for a 01 condition on the pins, and then for a 11 condition. Once satisfied, a loop will read two bits at a time from the pins and every time a byte is filled it will be pushed to the PIO FIFO, where a DMA controller will move it to a ping-pong buffer.

// see LAN8720A datasheet: 3.4.1.1, 3.1.4.3

// pins 0, 1, 2 map to RX0, RX1, CRS_DV, respectively, and are inputs

.program rmii_rx

.wrap_target

wait 0 pin 2 [1] // wait for any outstanding frame to end (CRS_DV=0)

wait 1 pin 2 [1] // wait for a frame to start (CRS_DV=1), data may be b00 until proper decoding

wait 1 pin 0 [1] // sync to preamble (b01), uses 2 cycles of the 31-cycle (62-bit) preamble

wait 0 pin 1 [1]

wait 1 pin 1 [1] // wait for start-of-frame indicator (b11)

dataloop:

in pins, 2

jmp pin dataloop // 2, stop collecting when frame ends (CRS_DV=0)

in pins, 2 // but account for 2.5MHz toggling at the end

jmp pin dataloop // 2

mov ISR, NULL

IRQ wait 0 // signal DMA to switch buffers and process this frame, wait to restart

.wrapThe PHY drops its CRS_DV line when it detects the end of the frame; but as it has an internal FIFO, some data may still be waiting in it. Under this condition, the CRS_DV line is toggled on nibble boundaries until the FIFO contents are sent out, so when this line goes down the code will check if it is raised again. If it was, the code will keep looping until a steady low CRS_DV line is detected. If not, the possible extra data in the IRS is cleared and discarded to be fresh for the next frame.

Detected the end of a frame, the code triggers an interrupt so the firmware can switch the DMA buffers and process the frame. Once the firmware has switched to the other buffer in the ping-pong set, it will clear the interrupt and the code will wrap and be ready to receive the next frame, so this should be done in a time shorter than the IFG.

State machine initialization code follows:

All pins are set as inputs, then the CSR_DV pin is assigned to be read by the jmp instruction. Since this machine only inputs data, both FIFOs are joined into the Rx FIFO. The machine shifts bytes LSb first.

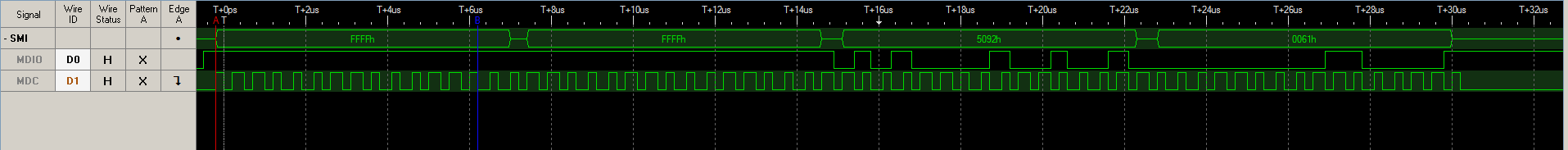

The management interface (SMI)

In the SMI (Simple Management Interface), data is exchanged MSb first in 16-bit quantities over a bidirectional MDIO (Management Data I/O) line, synchronous to the MDC (Management Data Clock) line driven by the MAC controller.

A frame consists of a 32-ones preamble, a 16-bit control word, and a 16-bit data word, driven either by the MAC controller or the PHY depending on the control word. The whole spec is available on the Internet, so you can check it out.

The MAC controller drives the MDIO line during the preamble and most of the control word time, and in the data time when writing. When the MAC controller issues a read command, it releases the MDIO line and lets the PHY take over during the turnaround time.

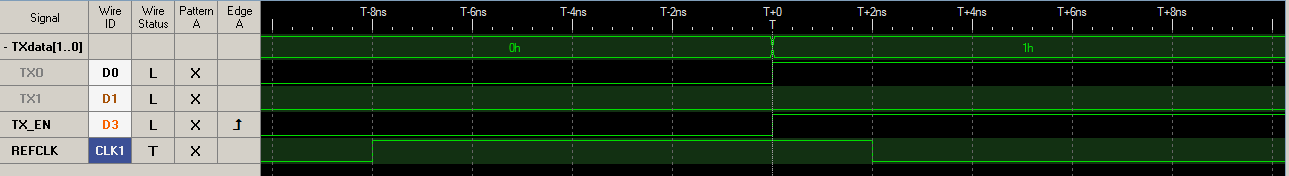

Data must be stable at the rising edge of the clock, MAC controller data has a 10 ns setup and hold times constraint. The PHY must have a maximum access time of 300 ns, so with the fastest clock period of 400 ns (2.5 MHz) the usual (and safe) practice is to read the MDIO line during the second half of the low semi-cycle of the MDC signal.

Writing a register to the PHY is simple:

// pin 0 maps to MDIO, and can be set or out. Pin 1 maps to MDC (clock) and is side_set controlled

// Runs at <10MHz, data is sent at <2.5MHz

.program smi_wr

.side_set 1

start:

set X, 31 side 0

preloop:

set pins, 1 side 0 [1]

jmp X--, preloop side 1 [1] // send preamble (4 clock cycles per bit)

hdloop:

out pins, 1 side 0 [1]

jmp , hdloop side 1 [1] // send header and data until stall

State machine initialization code follows:

Both pins are set as outputs, MDIO can be controlled by the out and set instructions, while MDC is controlled by the side-set operation. The machine shifts 16-bits MSb first.

Reading a register from the PHY is a bit more involved, to be able to divide a 2.5MHz clock in 4 parts, we should run at 10Mhz, which is 50 MHz (the system clock driven by the PHY) divided by 5. To guarantee not to exceed that frequency it is convenient to use a divide by 6 constraint, but calculations can be safely done at 10MHz as working slowly will be safer.

// pin 0 maps to MDIO, and can be in, set or out. Pin 1 maps to MDC (clock) and is side_set controlled

// Runs at <10MHz, data is read at <2.5MHz on the second half of the low clock period

.program smi_rd

.side_set 1

start:

set X, 31 side 0

preloop:

set pins, 1 side 0 [1]

jmp X--, preloop side 1 [1] // send preamble (4 clock cycles per bit)

set X, 13 side 1 // ignore 2 LSb in header (turnaround)

hdrloop:

out pins, 1 side 0 [1]

jmp X--, hdrloop side 1 [1] // send header

set pindirs, 0 side 0 [1] // set to input, turnaround: bit 1

set pins, 1 side 1 [1] //

nop side 0 [1] // bit 2

set X, 15 side 1 [1] // read 16 bit data

rdloop:

nop side 0

in pins, 1 side 0 // read on second half for

jmp X--, rdloop side 1 [1] // 300ns max access time

.wrap_target

set pindirs, 1 side 1 // drive bus high again, "wait"

.wrapPerhaps the tricky part is to change the pin direction in the middle of the transfer, see how the code writes to pindir to set the pin as an input and then at the end sets it back as an output. Following is the state machine initialization that makes this possible, see how both write and read refer to the same pin:

The MDC pin is set to be controlled by the side-set operation as before, but the MDIO pin is not only configured to be controlled by the out and set instructions but also to be read by the in instruction. The machine shifts 16-bits MSb first.

The Mongoose driver

Mongoose’s TCP/IP stack driver API is simple and elegant. As can be seen on this tutorial, it just requires writing a set of four functions (send, receive, interface is up, initialize) and there is a lock-free queue that can be used to decouple asynchronous environments like interrupt contexts.

Interface with the PHY

This is very simple and basic, as all the work is done by the PIO state machines. The functions prepare a 16-bit half-word containing the command to be written to the PIO FIFO and initialize the corresponding state machine.

The write function then writes command and data to the FIFO, while the read function writes the command and waits for the data to be pushed back by the state machine:

Sending a frame

To send a frame, the driver checks any former DMA operation has finished, by blocking on the DMA channel. Then, the data is copied to the send buffer (just in case the frame length is less than the Ethernet minimum, the buffer’s first 60 bytes are previously filled with zero and the length is trimmed to be 60 or more bytes). After this, the CRC is calculated and appended to the frame. Finally, a minimal wait enforces that the IFG is not violated and then the buffer is handed to the DMA channel to be sent.

Receiving a frame

As said above, the PIO state machine synchronizes to the preamble and detects the end of the frame, generating an interrupt.

The corresponding interrupt handler first reads the DMA channel to get the number of bytes left to be transferred; as the transfer was initialized to the maximum possible frame length, subtracting from that number gives the received frame length.

Then, the DMA channel is stopped, and reinitialized with the complementary buffer in the ping-pong set; then the PIO state machine gets its interrupt request acknowledged so it can wrap and continue receiving frames.

The received frame is then pushed to the lock-free queue as soon as possible as this is IRQ handler context.

Later, when the Mongoose event manager calls its built-in TCP/IP stack, it in turn will call this driver receive function.

This function checks the lock-free queue. If there is an outstanding frame, it is retrieved and its CRC checked; if it is correct, then the frame is delivered to Mongoose to be processed

Checking the link state

In this function, the driver asks the PHY for its status, reading the BSR (Basic Status Register), which contains the link state information that will be returned to Mongoose:

Initialization

The driver initialization code checks if the configuration specifies a queue size, otherwise it configures a default size of 8192 bytes.

Then the PIO state machine programs are loaded into the PIO memory areas. The RMII data machines and the SMI transmit machine take a big portion of the PIO0 memory; unfortunately there is not enough room left for the SMI receive machine, so this one uses PIO1:

Then, DMA is configured for the RMII data machines, and these are initialized.

The RMII data transmit DMA channel is configured to read from memory with auto increment and always write to the same address (the transmit machine FIFO). Transfer size is configured in bytes, and the machine is initialized and started, so it will stay waiting for data to be available in its FIFO to start sending a frame:

The PHY is initialized next, auto-negotiation is enabled but only for 10Mbps, this allows working with switches and (good old) hubs. A sleep call is inserted between calls so the PIO state machine is able to stop after the data byte (observing its FIFO empty) and wrap around its program:

Finally, the RMII receive DMA channel is initialized to read from a fixed address (the FIFO) and write with autoincrement to memory. Transfer size is also configured in bytes, and the channel is started right away with a maximum frame size transfer length. Then the PIO IRQ is configured and enabled, and then the PIO state machine is enabled; it will start the DMA data transfer when it synchronizes and starts pushing bytes to its receive FIFO:

Can this be improved ?

It always can…While writing this post, some possible improvements came to mind, please excuse me for brainstorming out loud. I wonder if we’ve already hit the sweet spot in the law of diminishing returns, but exercising our minds is always good.

The receiver could certainly be improved.

We could have two machines running the same program (no extra PIO memory usage), synced by an IRQ flag, let’s call it ‘x‘:

wait irq x // wait for the start order

...

irq x nowait // signal the other state machine to take over

irq 0 wait // signal the cpu there is an outstanding frameBoth machines would run the same program, but they would be configured with two different buffers. When the first machine finishes receiving a frame, it raises the internal IRQ flag and the second one takes over. The former then signals the system and waits for the CPU to acknowledge. Once the CPU has read the buffer, it will wrap and wait for the second machine to finish receiving to take over again. Let’s devise later how to start only one of the machines when the system starts up… probably starting at other address…

This would eliminate the 12μs constraint on switching DMA buffers, but a frame still must be dealt with before the other buffer fills up…

The next step would probably be to use more state machines, with more IRQ flags (which means different code but probably a jmp will do); for example, having four buffers will give us more time to handle a frame. Or, we could just have these two machines and a ping-pong buffer on each one, that would give us a total of four buffers; having to fill three of them would probably give us enough time to deal with a frame, and the two-machine strategy has already eliminated the buffer switching constraint.

Remote development with MQTT and a little help from my friend

Not too long ago, my friend Diego contacted me to solve a technical issue for an art event. He had this idea of a neon sign, well, two actually, the message was split in half with a strong change in meaning and he wanted the second half to activate once a visitor was actually observing the photos that carried other dimension to the message.

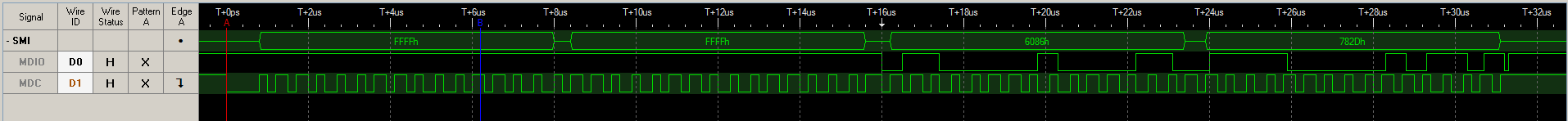

We talked for a while and ruled out motion sensors, people stay still while watching photos. I wanted to try those new time-of-flight distance measurement chips, but their field of view was extremely narrow and we needed to cover a 4-meter wide distance two meters away from the piece of art, and we couldn’t do anything to the lateral walls…

As not long ago I’ve been designing a liquid level measurement device based on Acconeer‘s radar chips, I suggested we could use two of them to cover the area.

Under “normal” circumstances I would design something specific with a microcontroller, develop in C, and rely on Mongoose or Mongoose-OS if I needed comms. We had little more than a month to do this, I was busy with other projects, the event would take place in Miami, I live several thousands of miles south, and my friend is an artist… Diego is computer literate, we met decades ago at the Amiga forums in the Fido network (yes, we used dial-up modems and sent already written messages), but I needed to be able to calibrate the radars and make corrections to the software from my place.

I suggested to use a Raspberry Pi with WiFi, a relay board to control the lights, and two radar modules with USB connection. Diego bought them all and soon had them connected at his place; he also added a subscription to VNC so we both were able to access the RPi behind firewalls.

I chose Python for the software, that would allow me to try things on the fly and modify at will with remote access. I wrote a couple of mock libraries and developed the software on my computer (I didn’t have a Raspberry Pi), I already had one sensor and the manufacturer library provided simulation for the second one.

One design choice was to use Paho-MQTT for communications. Not only would this device report any activity (that we could later analyze to take information on visitor activities), but I needed to be able to see what the radars were seeing in order to properly configure their gain and sensitivity.

Once developed, the software was uploaded to a Github repository, then I connected to the RasPi via VNC and cloned the repo there. The radar modules came without a firmware loaded, so I uploaded it to the RPi along with the microcontroller flashing tool, asked Diego to press the proper buttons (we communicated through Whatsapp) on the modules and flashed them. Wrote a small piece of code so he could test the relays, and everything was ready for the hard part.

The Python program reads both sensors raw data and using Acconeer Exploration Tool (in algorithm mode) processes five readings per second and measures the distance to the closest peak detected by a CFAR algorithm. If that peak is at the desired distance range, which we estimated at a visitor typical viewing distance from 60cm to 2.5m, the program activates the relay that turns on the complementary neon sign (though they actually are LED signs in neon color…) and keeps it on for 10 seconds after all activity ceases. Every action, and no action for 1 minute, is published on a well-known (for us) MQTT topic on HiveMQ’s free broker. We could then know if the device is working by just subscribing to that topic. Paho-MQTT runs as a separate thread, the whole program in the main thread.

Besides that, I also added a small set of RPC calls, the device listens on a topic and I, from my lab, publish questions asking the status and providing a rendezvous point (the topic where the device will publish its response) and get the average data, the threshold, and any detection, inside a JSON object. This is done with a separate Python program, also based on Paho, running on my workstation. This program then uses pyplot (I’m used to MATLAB so…) to graph the readings and provide me with an idea of what is going on in Miami.

The hard part was finding the sweet spot of both sensor orientation and gain/sensitivity to achieve proper human detection with minimum false positives. We humans are not good microwave reflectors so the SNR was really poor. We asked but the art gallery personnel said dressing the visitors in full metal jacket was not an option, so Diego would walk the area phone in hand, texting me via Whatsapp, while I, 7000Km away, would watch the graphs and tweak gain and sensitivity.

Happily, we were able to get this device working OK right on time, and my friend enjoyed the reception at the art gallery while I grabbed a beer at home.

MQTT over TLS-PSK with the ESP32 and Mongoose-OS

This article is an extension to this one; I suggest you read it first.

Should you need more information on the MQTT client and using it with no TLS, read this article. For a bit more on TLS, this one.

TLS-PSK

There is a simpler solution to using full-blown TLS as we’ve seen on our first article; instead of using certificates, broker and connecting device can have a pre-shared key (PSK).

The connection process is similar, but in this case, instead of validating certificates and generating a derived key, the process starts with the pre-shared key. Depending on the scheme being used, demanding Public Key Cryptography operations can be avoided, and key management logistics can be a bit simpler, plus, we don’t need a CA (Certification Authority) anymore.

Even though there are currently three different ways to work, where one of these allows broker validation using certificates and device validation using a PSK, we’ve only tested the simplest form.

Configuration

Let’s configure the ESP32 running Mongoose-OS to use TLS-PSK.

libs: - origin: https://github.com/mongoose-os-libs/mqtt # Include the MQTT client config_schema: - ["mqtt.enable", true] # Enable the MQTT client - ["mqtt.server", "address:port"] # Broker IP address (and port) - ["mqtt.ssl_psk_identity", "bob"] # identity to use for our key - ["mqtt.ssl_psk_key", "000000000000000000000000deadbeef"] # key AES-128 (or 256) - ["mqtt.ssl_cipher_suites", "TLS-PSK-WITH-AES-128-CCM-8:TLS-PSK-WITH-AES-128-GCM-SHA256:TLS-ECDHE-PSK-WITH-AES-128-CBC-SHA256:TLS-PSK-WITH-AES-128-CBC-SHA"] # cipher suites

The most common port for MQTT over TLS-PSK is 8883. The broker can also request we send a username and password, though the usual stuff is to configure it to take advantage of the identity we’re already sending, as we did on these tests (example below).

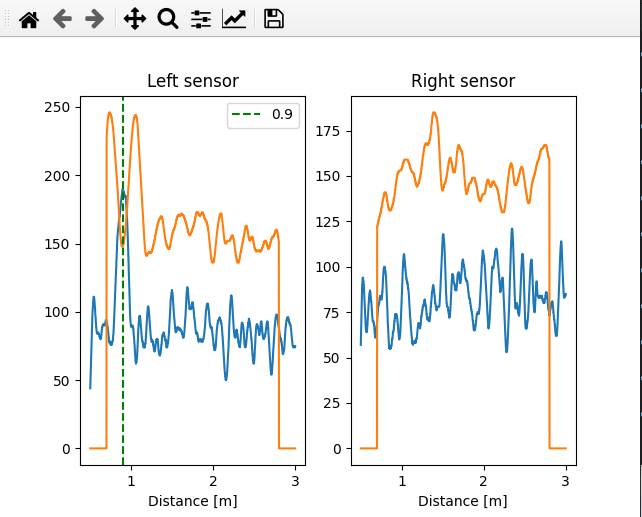

Regarding cipher suites, we need to provide a set of cryptographic schemes supported by our device, and at least one of them must also be supported by the broker. This is a mandatory parameter, otherwise our device won’t announce any TLS-PSK compatible cipher suite and TLS connection will not take place. Usually we negotiate this with the broker admins.

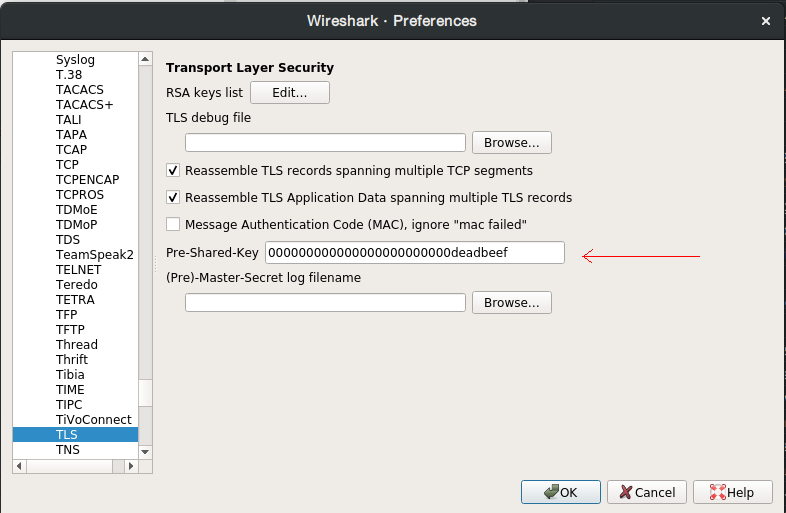

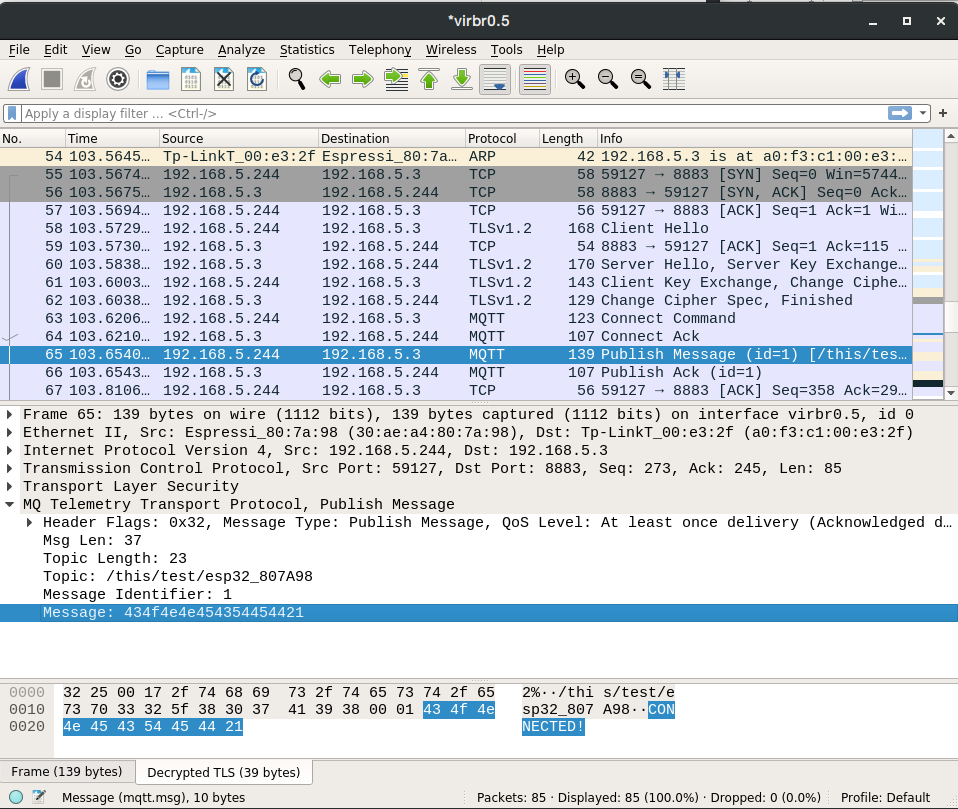

When starting the TLS connection, the device sends its supported cipher suites in its ClientHello message; the broker then chooses one that it supports and indicates this on its ServerHello message. As we’ve obtained this list by analyzing the source code, we decided to publish it here so it can be of use. In our particular case, only one of the device cipher suites was also supported by the broker: TLS-PSK-WITH-AES-128-CCM-8. The start handshake with these messages can be seen at the Wireshark snapshot below.

Finally, our pre-shared key (PSK, parameter ssl_psk_key) must be a 128-bit (16-byte) quantity for AES-128 suites and a 256-bit (32-byte) quantity for AES-256 suites.

Operation

At startup, we’ll watch the log and check if everything is going on as it should, or catch any possible errors. In this sad case, it is pretty hard to properly determine what is going on without a sniffer, as both sides are often not very verbose nor precise. The handshake, as well as the MQTT messages inside TLS, can be observed with a sniffer, by introducing the proper key (see the example using Wireshark below).

[Mar 31 17:28:32.349] mgos_mqtt_conn.c:435 MQTT0 connecting to 192.168.5.3:8883 [Mar 31 17:28:32.368] mongoose.c:4906 0x3ffc76ac ciphersuite: TLS-PSK-WITH-AES-128-CBC-SHA [Mar 31 17:28:32.398] mgos_mqtt_conn.c:188 MQTT0 TCP connect ok (0) [Mar 31 17:28:32.411] mgos_mqtt_conn.c:235 MQTT0 CONNACK 0 [Mar 31 17:28:32.420] init.js:34 MQTT connected [Mar 31 17:28:32.434] init.js:26 Published:OK topic:/this/test/esp32_807A98 msg:CONNECTED!

Brokers: Mosquitto

This is a minimal configuration for Mosquitto, take it as a quick help and not as a reference. Paths are in GNU/Linux form and we should put our keys there.

listener 8883 192.168.5.1 log_dest syslog use_identity_as_username true # so the broker does not ask for username in the MQTT header psk_file /etc/mosquitto/pskfile psk_hint cualquiera # any name

Parameter psk_hint serves as a hint for the client on which identity to use, in case it connects to several places; we don’t use it in this example.

The file pskfile must contain each identity and its corresponding pre-shared key; for example, for our user bob:

bob:000000000000000000000000deadbeef

Example

Companion example code available in Github.

Sniffers: Wireshark

With any sniffer we are able to see the TLS traffic, but not its payload

With Wireshark, we can decrypt TLS-PSK entering the desired pre-shared key in Edit->Preferences->Protocols->TLS->Pre-Shared-Key.

We are then able to see MQTT traffic as TLS payload.

MQTT over TLS with the ESP32 and Mongoose-OS

Mongoose-OS has a built-in MQTT client supporting TLS. This allows us to authenticate the server, but also the server infrastructure is able to authenticate the identity of incoming connections, that is, to validate that they belong to authorized users.

For further information on the MQTT client and using it without TLS, read this article. For more information on TLS, read this one.

Configuration

We’ll configure the ESP32 running Mongoose-OS to use the MQTT client and connect to a WiFi network. Since we are going to connect to a server (the MQTT broker), this is perhaps the simplest and fastest way to run our tests. This action can be done manually using RPCs (Remote Procedural Calls), writing a JSON config file, or it can be defined in the YAML file that describes how the project will be built. We’ve chosen this last option for our tests.

libs: - origin: https://github.com/mongoose-os-libs/mqtt # Include the MQTT client config_schema: - ["mqtt.enable", true] # Enable the MQTT client - ["mqtt.server", "address:port"] # Broker IP address (and port) - ["mqtt.ssl_ca_cert", "ca.crt"] # Broker certificate, required - ["mqtt.ssl_cert", "sandboxclient.crt"] # our certificate, for mutual authentication - ["mqtt.ssl_key", "sandboxclient.key"] # our key, for mutual authentication

The most common port for MQTT over TLS is 8883. The broker can also ask us to send a username and password, full configuration details are available at the corresponding Mongoose-OS doc page. Finally, it is the broker who decides which type of authentication to use (one- or two-way), though we must provide our certificate when the broker asks for it.

Operation

Before we build our project and execute our code, it is perhaps convenient to have an MQTT client connected to the broker, so we can see the message the device will send at connect time. We’ve used a broker we installed in a server in our lab and connected to it with no authentication, so all we’ve shown in the MQTT article is valid here too.

After the code is compiled and linked (mos build) and the microcontroller’s flash is programmed (mos flash) using mos tool, we’ll watch the log and check if everything is going on as it should, or catch any possible errors. Remember we need to configure the proper access credentials to connect to our WiFi network of choice (SSID and password), and the address and port for the MQTT broker we are going to use. At the end of this note we show how to configure a Mosquitto broker.

One-way authentication

This way we can authenticate the server, that is, we can trust it is the one to whom we want to connect, but the server has no idea who can we be.

This is the simplest configuration and we only have to provide the CA certificate filename in the parameter ssl_ca_cert, the certificate has to be stored in the fs directory. This indicates the broker has to be validated.

[Mar 31 17:21:18.360] mgos_mqtt_conn.c:435 MQTT0 connecting to 192.168.5.3:8883 [Mar 31 17:21:18.585] mongoose.c:4906 0x3ffca41c ciphersuite: TLS-ECDHE-RSA-WITH-AES-128-GCM-SHA256 [Mar 31 17:21:20.404] mgos_mqtt_conn.c:188 MQTT0 TCP connect ok (0) [Mar 31 17:21:20.414] mgos_mqtt_conn.c:235 MQTT0 CONNACK 0 [Mar 31 17:21:20.423] init.js:34 MQTT connected [Mar 31 17:21:20.437] init.js:26 Published:OK topic:/this/test/esp32_807A98 msg:CONNECTED!

Two-way (mutual) authentication

This way we can authenticate the server and the client, that is, now the server knows we should be the one who our certificate says we are. This dual process requires lots of processing and we’ll notice that connection establishment takes longer. In this configuration we must provide our certificate and key in the fs directory, and configure parameters ssl_cert and ssl_key appropriately; otherwise the broker might give us a clue in its log, as Mosquitto does:

Mar 31 17:30:52 Server mosquitto[2694]: OpenSSL Error: error:140890C7:SSL routines:ssl3_get_client_certificate:peer did not return a certificate

Brokers: Mosquitto

These are minimal configurations for Mosquitto, take them as a quick help and not as a reference. Paths are in GNU/Linux form and we should put our certificates there.

One-way authentication (broker authentication)

listener 8883 192.168.5.1 log_dest syslog cafile /etc/mosquitto/certs/ca.crt certfile /etc/mosquitto/certs/server.crt keyfile /etc/mosquitto/certs/server.key

Mutual (two-way) authentication

listener 8883 192.168.5.1 log_dest syslog cafile /etc/mosquitto/certs/ca.crt certfile /etc/mosquitto/certs/server.crt keyfile /etc/mosquitto/certs/server.key require_certificate true

Example

Companion example code available in Github, here and here. We’ll also need server credentials, I left a set here so we can do this easily.

Timer expired, I think…

Quite often we have several ways to perform a task. Sometimes we choose based on personal taste or affinity, others there is a context that enforces certain rules that limit our safe choices.

When working with software timers, I usually choose to use an old scheme based on multiple independent timers that has found its way through the assembly languages of many different micros and has finally landed in C. Sometimes, due to 32-bits being available, I may choose to simply check the value of a counter. In both cases, each timer and the counter are decremented and incremented (respectively) in an interrupt handler.

This requires that (in C) we declare this variable volatile so the compiler knows that this variable might change at any time without the compiler taking action in the surrounding code. Otherwise, loops waiting on this variable will be optimized out.

extern volatile uint32_t tickcounter;On the other hand, and due to the same reason, the access to this variable must be atomic, that is, it has to occur in a single processor instruction (or a non-interruptible sequence of them). Otherwise, the processor might accept an interrupt request in the middle of the instruction sequence that is reading the individual bytes forming this variable, and if this interrupt modifies this variable we would end up with an incorrect value. This happens, for example, if we use 32-bit counters/timers on most 8-bit micros; more info on this subject in another post.

Once we solve this issues, then there is the problem of counter length, the number of bits defining integer word size for our timers.

My timer scheme above is quite simple: each timer is decremented if it has a non-zero value, so to use one of this I simply write a value and then poll until I get a zero. There is obviously a jitter as there is a time gap from the moment I write the timer till it’s decremented by the interrupt handler, and another one since the timer reaches zero and the application reads the value.

However, reading a counter, on the other hand, poses some issues; and their solutions were the inspiration to write this post. Because of atomicity, we might end up being forced to use a small variable, a short length counter that will overflow in a short time.

What happens when this counter overflows ? Well, maybe nothing happens, if we take precautionary measures.

Everything will work as a charm and all tests will pass as long as the counter doesn’t overflow between readings, that is, counter length is enough for the application lifetime. For example, a 32-bit seconds counter takes 136 years to overflow. However a 16-bit milliseconds counter overflows every minute and fraction (65536 milliseconds).

If the time between counter readings is larger, there is the possibility that the counter may overflow more than once between checks. In this situation, we must use an auxiliary resource, as the counter alone can’t do it.

If, however, we can be sure that the counter will overflow at most once between checks, we just need to write proper code in order for our comparison to survive this overflow. Otherwise, as we’ve already said, everything will work fine most of the time, but “sometimes” (every time there is a counter overflow) we’ll get a glitch.

For the sake of this analysis, let’s consider the following scheme, we are using uppercase names in violation of some coding styles but we do it for counter highlight’s sake:

extern volatile uint32_t MS_TIMER;

uint32_t interval = 5000; // milliseconds

uint32_t last_time;The following code snippets work fine, as the difference survives counter overflow and can be correctly compared to our desired value:

last_time = MS_TIMER;

if(MS_TIMER - last_time > interval)

printf("Timer expired\n");

if(MS_TIMER - interval > last_time)

printf("Timer expired\n");On the other hand, this does not work properly

uint32_t deadline;

/* I must not do this

deadline = MS_TIMER + interval;

if(MS_TIMER > deadline)

printf("Timer expired\n");

*/If, let’s say, MS_TIMER is 0xF8000000 and interval is 0x10000000, then deadline will be 0x08000000 and MS_TIMER is clearly larger than deadline from the beginning, this causes the timer to expire immediately (and that is not what we want…).

A workaround is to use signed integers, and this works with time intervals up to MAXINT32 units:

int32_t deadline;

int32_t interval = 5000; // milliseconds

deadline = MS_TIMER + interval;

if((int32_t)(MS_TIMER - deadline) > 0)

printf("Timer expired\n");To dive into more creative ways might result in timers that never expire when the counter is higher than MAXINT32, as this is seen as a negative number and so smaller than any desired time (that is a positive number); but do work when our device is started or reset. These can be considered as errors when choosing counter length (including sign).

As we’ve seen, some problems show themselves when referring to a time that is MAXINT32 time units after the system starts. This is usually 2^31, and this, for a milliseconds counter, is around 25 days; and for a seconds counter about 68 years. There can be several errors lurking deeply inside some Linux (and other Un*x-like) systems that will surface once they need to make reference to a time after year 2038 (the seconds counter starts at the beginning of year 1970).

If, on the other hand, we use 16- or 8-bit schemas…

In these examples we used ‘greater than’ in comparisons; this guarantees that the time interval will be longer than requested. If, for example, we set it right now and ask for a one second interval, and one millisecond later the interrupt handler increments the counter, should we use a ‘greater than or equal’ comparison our timer will expire immediately (well, in 1ms…). Also, if we set it 1ms after the interrupt took place it will expire in 1,999s instead of 1s. In a scenario where increment and comparison are not asynchronous, we can avoid jitter and use a ‘greater than or equal’ comparison.

MSP430

Escribí este texto por allá por el 2003… no recuerdo haberlo corregido, puede tener desde inconsistencias y errores hasta horrores ortosemantácticos. Lo dejo aquí por si a alguien le resulta útil o el ejército de buscadores lo atrae al resto del contenido, que debe ser al menos unos casi veinte años mejor.

Introducción

La serie MSP430 de Texas Instruments es un conjunto de microcontroladores RISC de 16 bits de ultra bajo consumo, con una serie de periféricos sumamente interesantes, interconectados en un mapa de memoria lineal con arquitectura Von Neumann, es decir, buses integrados de direcciones y datos para instrucciones y datos. Tanto RAM como flash, SFRs (Special Function Registers, registros para funciones especiales) y periféricos son direccionados por un único bus de direcciones y entregan sus datos en un único bus de datos; ambos internos. Un sistema de reloj altamente flexible permite que el procesador pueda elegir la frecuencia de operación de la CPU y de diversos periféricos de entre las opciones de clocking disponibles, minimizando el consumo cuando no se requiere la operación de alguno.

Características principales

- Arquitectura de ultra-bajo consumo

- Retención de datos en memoria RAM interna con un consumo de 0,1 uA

- Operación como reloj de tiempo real con un consumo promedio estimado de 0,8uA

- Consumo en estado activo promedio de 250uA, a una velocidad de operación de 1 MIPS (un millón de instrucciones por segundo)

- Conversores analógico-digitales de 10 ó 12 bits, de 200Ksps (doscientas mil muestras por segundo), con sensor de temperatura y generador de tensión de referencia integrados

- Conversores digital-analógicos de 12 bits

- Temporizadores (timers) comandados por un comparador interno, permiten medir elementos resistivos

- Supervisión de la tensión de alimentación

- CPU de 16 bits, RISC (Reduced Instruction Set Code)

- Gran cantidad de registros, de modo de eliminar cuellos de botella

- Set de instrucciones optimizado para programación de alto nivel

- Sólo 27 instrucciones y 7 modos de direccionamiento

- Interrupciones vectorizadas

- Programación en sistema de la memoria flash interna; permitiendo flexibilidad para la actualización del código y captura de datos (data logging)

El consumo del chip en el estado inactivo es del orden de 1uA. El consumo en estado activo, con una tensión de alimentación de 3V, y con un clock de 1MHz, es de aproximadamente 250uA. El MSP430 puede pasar de un estado al otro en un máximo de 6us, permitiendo que el programador mantenga un consumo extremadamente bajo y a la vez sea capaz de atender una interrupción en un tiempo sumamente pequeño.

Todos los periféricos del chip han sido optimizados para lograr un máximo control sobre el consumo del sistema, su funcionamiento es independiente del estado de la CPU, por lo cual es posible mantener un timer con funciones de RTC (Real Time Clock, reloj de tiempo real), para la ejecución de tareas periódicas, mientras el chip consume cerca de 1 µA. También es posible esperar el pulsado de una tecla, datos de un port serie, o realizar la tarea de refresco de un display LCD mientras la CPU duerme. Poseen un estado adicional en el cual son capaces de retener los datos en registros y RAM con un consumo de 0,1 µA, de este estado sólo es posible salir a través de una interrupción externa o reset, ya que ninguno de los clocks está operativo.

Sistema de clocking

El sistema de relojes ha sido diseñado expresamente para aplicaciones con alimentación a baterías. Posee un reloj auxiliar (ACLK), que puede funcionar directamente con un cristal de 32KHz (ideal para aplicaciones de RTC) o con un cristal de alta frecuencia, agregando los capacitores correspondientes. Posee además un oscilador controlado digitalmente (DCO, Digitally Controlled Oscillator) de alta velocidad, que puede proveer el reloj maestro (MCLK) para la CPU y los periféricos más rápidos y/u otro reloj adicional (SMCLK) para otros periféricos. Las características de diseño de este DCO garantizan que esté activo y estable en menos de 6us luego de su inicio, de modo que las soluciones basadas en MSP430 puedan aprovechar al máximo las características de alta performance de la CPU utilizando su procesamiento en ráfagas cortas, como por ejemplo la atención de eventos por interrupciones.

Algunos modelos incluyen un segundo oscilador de alta frecuencia para aumentar las opciones de clocking disponibles.

En resumen, tenemos básicamente tres fuentes de reloj: ACLK, MCLK y SMCLK. ACLK toma reloj del oscilador a cristal; MCLK y SMCLK derivan su reloj de un oscilador a cristal o del DCO, a elección del programador. Es posible intercalar un divisor (prescaler) para bajar la frecuencia de reloj en cada una de ellas. La CPU toma reloj de MCLK, y los diversos periféricos incorporan opciones para seleccionar la fuente de reloj y algunos incluyen, a su vez, otros divisores. Todos los osciladores pueden ser prendidos y apagados a voluntad, para minimizar el consumo, y la CPU puede “desconectar” su fuente de reloj, para pasar a los estados de bajo consumo.

CPU

La CPU del MSP430 es un RISC de 16 bits. El direccionamiento de memoria es lineal (no existen bancos ni paginado ni segmentado de ningún tipo) y puede hacerse por bytes o por words. El acceso por words requiere que éstas estén alineadas, es decir, comenzando en una dirección par. La figura a la izquierda muestra el mapa de memoria, donde se aprecia la integración de RAM, flash, SFRs y periféricos en un mismo espacio de direccionamiento lineal.

El set de instrucciones es altamente ortogonal; con muy pocas excepciones todas las instrucciones pueden utilizarse en todos los registros con cualquier modo de direccionamiento. Esto resulta una amplia ventaja sobre otras arquitecturas anunciadas como RISC pero que necesitan de un registro especial que es operando forzado de todas las operaciones, al igual que el acumulador en la mayoría de los CISC. En el MSP430, cualquier registro es fuente y cualquiera es destino, para cualquier modo de direccionamiento, excepto el modo indirecto, que sólo puede ser empleado para el operando fuente.

Dada la reducida cantidad de instrucciones, resulta sumamente fácil y rápido el aprendizaje. Dada la gran cantidad de modos de direccionamiento, el programador se dedica a resolver su algoritmo en vez de luchar contra la arquitectura de la CPU.

El código generado es sumamente compacto, y compite con otros procesadores de 8 bits de similares características. A la hora de comparar, debe tenerse en cuenta que si bien las instrucciones y datos en el MSP430 son de 16 bits, la flexibilidad de la arquitectura generalmente permite realizar la tarea deseada con menos instrucciones. Para portar una aplicación desarrollada en un CISC a la arquitectura del MSP430 y poder relizar una comparación, es muy probable que se deba re-escribir el código pensándolo de forma diferente y haciendo uso de conceptos que le son más propios. Por ejemplo, es muy común en muchos CISC y algunos pseudo-RISC el utilizar instrucciones de tipo “prueba y salto” (test and branch), es decir, chequear un flag en una dirección de memoria o registro y saltar si está activo. En el MSP430, es muchísimo más efectivo utilizar un esquema más parecido a una máquina de estados, es decir, utilizar “bytes de estado” (status bytes), que guardan el estado actual. El salto a una u otra parte del código se realiza simplemente sumando esa variable o registro al Program Counter (PC).

Para aquellos de nosotros acostumbrados a algunas instrucciones comunes en los CISC, el assembler del MSP430 emula algunas instrucciones aceptando el mnemónico y generando código RISC equivalente, por ejemplo: para desplazar a la izquierda un registro, algunos CISC incorporan una instrucción como por ejemplo RLA (rotate left aritmetically, rotar a la izquierda insertando ceros por la derecha), siendo que ya la tienen, dado que sumar un registro a sí mismo constituye una multiplicación por dos y en aritmética binaria eso es lo mismo que desplazar el registro a la izquierda. Por este motivo, el assembler del MSP430 acepta la instrucción RLA <operando> y genera el código ADD <operando>,<operando>.

El set de registros está compuesto por 16 registros, de éstos, cuatro son utilizados como PC (program counter), SP (Stack Pointer), SR (Status Register, parte baja de R2) y CG (Constant Generator, parte alta de R2 y todo R3), los restantes 12 registros pueden usarse como acumuladores o punteros de uso general definidos por el usuario. Puede observarse la potencialidad de esta arquitectura extremadamente versátil, de gran poder de cálculo y que resulta muy eficiente para ser programada en lenguaje C, o bien para lograr un programa bien estructurado. El CG utiliza el par R2/R3, que emula mediante modos de direccionamiento algunas constantes de uso típico, como 0, 1, -1, etc, evitando, de este modo, la necesidad de utilizar una palabra de 16 bits para esta constante. Por ejemplo, la instrucción CLR <operando> es reemplazada en primera instancia por el assembler por el código MOV #0, <operando>. Analizando el código de máquina, vemos que su estructura (desglose de los campos de operando fuente y destino, y modo de direccionamiento fuente y destino) corresponde a la siguiente instrucción: MOV R3, <operando>. La utilización del registro R3 en modo de direccionamiento de registros como operando fuente, indica que el CG contiene el valor 0. Este es un concepto muy complejo que veremos en más detalle al analizar la CPU; la idea de exponerlo aquí es mostrar cómo es posible economizar espacio de memoria de programa.

Programación, escritura y depuración del código (debugging)

Los MSP430 pueden programarse y accederse mediante una interfaz JTAG. Existen notas de aplicación de Texas Instruments en las cuales pueden apreciarse circuitos de aplicación (SLAA149).

Además, incorporan una ROM con un programa interno que permite que el microcontrolador pueda recibir paquetes de un formato especial y programar la memoria interna. Esta particularidad se denomina “bootstrap loader” y utiliza algunos recursos del procesador, que si bien pueden reusarse para otras aplicaciones, deberán ser tenidos en cuenta. La ejecución del código en esta ROM comienza cuando el procesador detecta una secuencia especial en un par de pines, al momento de reset. Existen notas de aplicación de Texas Instruments en las cuales se indica el hardware necesario para aprovechar esta característica especial (SLAA096).

De este modo, es posible, con mínimo hardware, disponer de una interfaz para realizar programación y actualización del programa en circuito, es decir, sin necesidad de retirar los micros de la placa.

Por supuesto que, además de ésto, el fabricante ofrece herramientas de desarrollo y programación. Los FET (Flash Emulation Tool) son herramientas que permiten grabar el dispositivo y trabajar sobre el sistema. Si bien su costo es bastante bajo, dada la disponibilidad de soluciones abiertas en la forma de notas de aplicación, y a su espíritu constructor, dichas herramientas no han sido evaluadas por el autor. El circuito esuqemático de varios FET está disponible en la página web de Texas Instruments, con lo cual es posible construírse una económica interfaz JTAG para port paralelo de PC que permite programar y depurar paso a paso.

El entorno de desarrollo “oficial”, al momento de escribir este documento, es el IAR Embedded Workbench de IAR Systems, obtenible en forma gratuita de la página web de Texas Instruments. Se trata de una versión especial que provee el compilador C, pero limitado a generar 1K de código objeto como máximo. Esto no resulta en disminución alguna para los amantes del assembler, que encuentran en éste un agradable entorno de desarrollo; pudiendo trabajar de forma modular, ya que el proyecto tiene su definición, los archivos involucrados, y el proceso de ensamblado y/o compilado es seguido por un linkeado que maneja el tema de la relocalización. Por supuesto que quienes así lo deseen pueden optar por comprar la versión que viene con el FET, o la versión completa sin restricciones para el compilador.

Entre las opciones open source, existe un port del tradicional GCC, disponible en la Internet, que nos permite compilar ANSI C sin restricciones.

Memoria

Encontramos memoria RAM y flash ó ROM, según el modelo. La dirección de inicio de la flash/ROM depende de la cantidad presente en el chip, de modo que la dirección final siempre sea 0FFFFh, para poder almacenar los vectores de interrupción y reset sin necesidad de incorporar un bloque adicional de flash, como hacen otros microcontroladores. Para el caso de memoria flash, la misma se halla segmentada en bloques de generalmente 512 bytes, la escritura puede hacerse por byte o por word, pero el borrado es por bloque. Generalmente también incluyen dos segmentos adicionales de 128 bytes, destinados al alojamiento de datos de calibración, de modo de poder aprovechar todos los segmentos principales para código y tablas. La operación sobre la flash puede hacerse en cualquier momento, el micro incluye un sistema de autocontención que lo demora si intenta acceder a una posición que está siendo escrita o borrada, por lo que no es estrictamente necesario disponer de código de borrado/escritura en RAM, las rutinas de borrado y grabación pueden residir en la misma flash, con la salvedad de que estén en un segmento diferente al que es objeto de la operación. Actualmente, la flash soporta unos 100.000 ciclos de borrado.

En cuanto a la RAM, siempre comienza en 0200h, aunque la cantidad total depende de cada dispositivo.

Ambos tipos de memoria pueden utilizarse indistintamente para código o datos, y pueden ser accedidas como words o como bytes, siempre respetando la alineación: los bytes pueden estar en direcciones pares o impares; las words sólo pueden residir en locaciones pares. La parte baja (byte menos significativo) de una word está siempre en la dirección par, seguida por la parte alta en la dirección inmediata superior. Por ejemplo, si una word reside en xxx4h, la parte baja está en xxx4h y la parte alta en xxx5h. Una misma variable o espacio puede accederse de una u otra forma, a criterio y conveniencia del programador, siempre que se respete la alineación. Supongamos que en la posición 0204h está guardado el dato 02123h:

MOV 0204h, R5 ; Lee word presente en posición de memoria 0204h y lo guarda en R5: R5=02123h MOV.B 0204h, R6 ; Lee byte presente en posición de memoria 0204h y lo guarda en R6: R6=023h MOV.B 0205h, R7 ; Lee byte presente en posición de memoria 0205h y lo guarda en R7: R7=021h

Leer un byte y leer un word alineado requiere el mismo tiempo de CPU, al contrario de otros micros de 16 o más bits, y por supuesto que los de 8 bits.

Periféricos y SFRs

Los periféricos de 16 bits mapean en el área de 0100h a 01FFh, y deben ser accedidos utilizando instrucciones de acceso por words, es decir, leyendo los 16 bits de una sola vez. Si se intenta accederlos por bytes, solamente se podrán acceder las direcciones pares, el byte más significativo (situado, como dijéramos, en la dirección impar subsiguiente) se leerá como cero.

Los periféricos de 8 bits mapean en el área de 010h a 0FFh, y deben ser accedidos usando acceso por bytes. El uso de direccionamiento por words para acceder a estos dispositivos puede resultar en datos incorrectos en el byte más significativo (dirección impar).

Algunas funciones particulares están configuradas por Registros de Funciones Especiales (SFRs), organizados por bytes en los primeros 16 bytes del espacio de memoria (00 a 0Fh). Deben ser accedidos solamente mediante acceso por bytes. Estos registros controlan la operación y configuración del microcontrolador.

El contenido y variedad de los periféricos, así como la función de los SFRs dependen de cada modelo de microcontrolador en particular. Particularmente, es destacable el hecho de que no sólo hay coincidencias en diversos miembros de una misma familia, sino que por lo general, aún en miembros de distintas familias puede observarse que el mismo periférico suele ocupar la misma posición de memoria y hasta el mismo pin de conexión; contrariamente a muchos otros microcontroladores de otras marcas.

Documentación

La hoja de datos de cada dispositivo o grupo de dispositivos tiene características eléctricas propias del mismo, el seteo de los SFRs que le son propios, y alguna descripción particular de algún periférico que le es propio.

En general, la descripción de cada uno de los periféricos y el seteo de los registros asociados, se encuentra en el manual del usuario de la familia correspondiente, junto con el set de instrucciones y la descripción de la CPU. Esto evita mantener información redundante en las hojas de datos, que resultan simples y concisas en vez de largamente extensas como sucede con otros fabricantes, que incluyen toda la información en cada hoja de datos. La redundancia se encuentra entre familias, es decir, las guías del usuario de diversas familias tienen información redundante entre sí. Por ejemplo, si un desarrollador trabaja con la familia 1xx, como es el caso de quien escribe, le basta con tener la guía o manual del usuario de la familia 1xx y las hojas de datos de cada dispositivo que usa: 11×1, 11×2/12×2, 12x, 13x/14x, por ejemplo.

On atoms and clocks

Interrupts, as their name implies, may interrupt the program flow of a microprocessor at any point, any time, even though sometimes they seem to intentionally do it in the most harmful way possible. The very reason of their existence is to allow the processor to handle events when it is not convenient to be waiting for them to happen or regularly poll for their status, or to handle those types of events requiring a fast and low latency attention.

When carefully utilized, interrupts are a powerful ally, we can even build multiple-task schemes assigning an independent periodic interrupt to each task.

When haphazardly used, interrupts may bring more problems than solutions; in particular, we can’t share variables or subroutines without taking due precautionary measures regarding accessibility, atomicity, and reentrability.

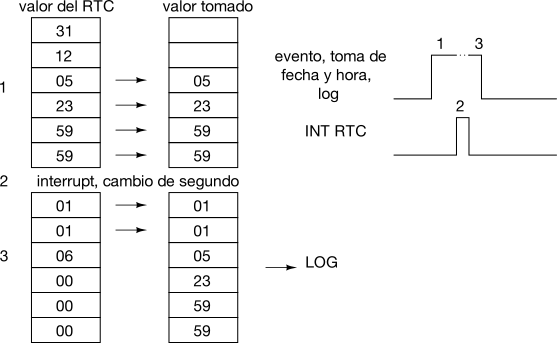

An interrupt may occur at any point in time, and if it occurs in the middle of a multi-byte variable update it can (and in fact it does) wreak havoc on other tasks. These may hold “partially altered” values that in the real world are incorrect values, and probably dangerously incoherent for the software. A typical and frequently forgotten example are timing or date and time variables. The main program happily takes these variables byte by byte in order to show them on the display screen, without thinking that, if they are updated by an interrupt, these are not restricted to being handled before or after our action and not in the middle of it. If those values are only shown at the display screen there is no further inconvenience, correct values will be shown one second later, but if they are logged or reported, we’ll be in serious trouble explaining why there is a record with a date almost a year off, as in the following example:

Some time ago there was this microcontroller-oriented developing environment that in order to “solve this inconvenience” introduced the concept of ‘shared variables’. Every time one of these variables was accessed or changed, the compiler introduced a set of instructions to disable the interrupts during this action and enable them back at the end. The problem I find on these strategies is that this is very bad educationally speaking, as bad as protection diodes in GPIOs (commented in this article): the user does not understand what is going on, does not know, doesn’t learn. Furthermore, frequent accesses to these variables produce frequent interrupt disables, which translate to latency jitter in interrupt handling.

Even though in the case of a multi-byte variable as the one in the example this is quite evident, the same happens with a 32- or 16-bit variable on 8-bit micros that do not have proper instructions to handle 32-bit (most of them) or 16-bit entities. Atomicity, that is, the ability to access the variable as an indivisible unit, is lost, and when accesses to these variables are shared by asynchronous tasks, there is the possibility of superimposing those accesses and then ending with something like what we’ve seen in the example above.

If our C development environment has signal.h, then we should find in there a definition for the sig_atomic_t type, that identifies a variable size that can be accessed atomically. Otherwise, and as a recommendation in these environments, the user must know the processor being used and act accordingly.

In multiprocessing environments there are other issues, that we will not list here.

Corollary

An interrupt may occur at any point in time, as long as it is enabled. The probability of this happening just in the very moment we are accessing a multi-byte variable in a non-atomic way is pretty low, particularly if it is a brief, non frequent access, that is not synchronized to interrupts. However, this probability is greater than zero, and so, given enough repetitions, it is likely to occur. Leaving the correct behavior of a device to the Poisson distribution function should not be considered a good design practice…

This post contains excerpts from the book “El Camino del Conejo“ (The way of the Rabbit), translated with consent and permission from the author.

Latch-up anyone?

(meditations on academically correct content in technical articles and application notes)

Some days ago, I was chatting with an old friend and mentor about electronic design stuff, and he raised the latch-up subject. Later, while I was reviewing an article inside my head (one I haven’t written yet), I recalled a similar example, on a different realm, that brought this to my mind, and so it takes form in this post.

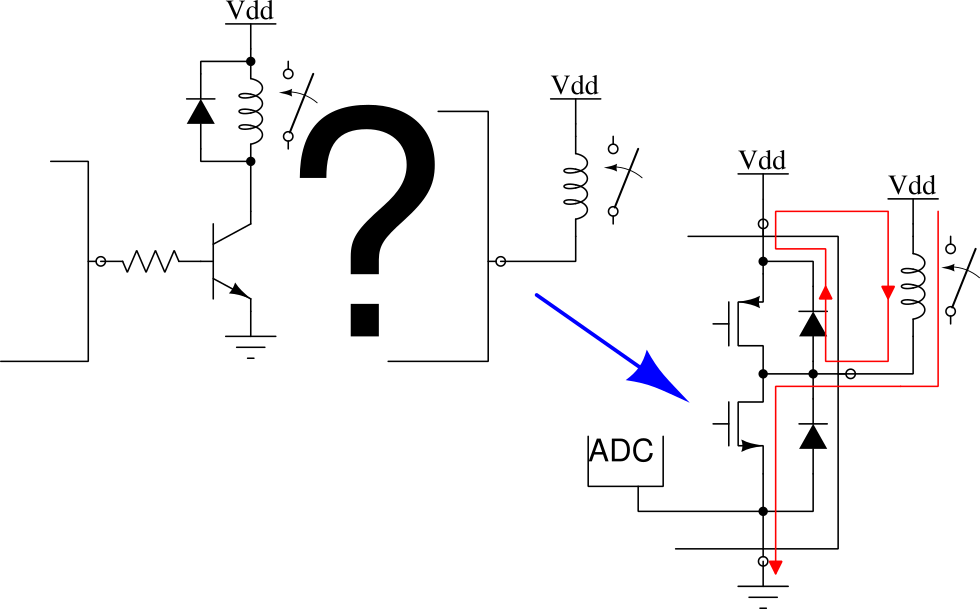

My thoughts were pondering how bad some features and ease of use can be, when we consider teaching and educational aspects. Around 2004, I attended a developer’s conference from a famous company selling “easy” microcontrollers (that are in reality the hardest thing to approach for someone who already knows what a microcontroller is and happens to work on many of them from different brand names). The presenter was showing an application note on which I could see a relay connected to a microcontroller I/O port (GPIO), to which I respectfully questioned if, at least, wasn’t it a bit convenient to add a flywheel (or “protection”) diode in there, and he confidently answered “all our microcontrollers have protection diodes in GPIO pins”.

Let’s start by saying that I’m one of those engineers who don’t use built-in weak pull-ups unless there is a reason to use them. Why? Because I try to keep my design as independent from manufacturer details as possible (in the future I might port this design to a “difficult” microcontroller, not having these cute add-ons), and also firmware independent; saving those tenths of a cent (setting PCB real estate aside) does not justify me being attentive to remembering to enable pull-ups or later find unintended operation up to the point when the code hits that instruction. In addition, in ultra-low power designs those internal weak pull-ups are unpredictable and can even be counterproductive. Furthermore, to anyone not having the design log (if it exists), there is no clue on the “academic” reason why that resistor has to be there, and all it says about the rest of the circuit; to me that is very important in an application note, as it is an engineering document.

Back to this relay being controlled by a GPIO, let’s say this leads to an enthusiast probably learning conflictive techniques that he may later replicate in other realms when he advances (I feel tempted to remind you all of the effects of using only BASIC to teach youngsters how to program in the 80’s).

First issue: the current through the relay. GPIOs usually present a MOS push-pull structure, that is, a P-channel MOSFET sources current from the power supply while a N-channel MOSFET drains current to ground. These MOSFETs usually have different areas, being the N-channel one bigger and so having more current capacity, and in many cases even not enough to drive a relay. Depending on how this relay is connected, it may operate correctly because the GPIO does not raise the output voltage too much, as the N-channel MOSFET is able to handle that current; or it may operate erratically because the GPIO lowers the output voltage too much, as the P-channel MOSFET is not able to handle that current. Other side of this is that, depending on how this relay is connected, its current will flow through the GND pin or the VDD pin, that is, it will enter the microcontroller through the power pin, coming from the power supply, or will return to it through the ground pin, creating, among other things, current circulation in unintended paths, and this can affect operation during relay turn-on and turn-off transients. This can be caused by parasitic inductance in the PCB tracks, and by loop antennas formed by the current flowing on one path and returning on a different path, that irradiate in all directions with an intensity that is proportional to the loop area. The current flowing on the ground pin can also affect measurements on a built-in analog-to-digital converter (the current on the power pin can also do it if the microcontroller saves the analog section power pin, VDDA).

Second issue: the current that should stop flowing through the relay when it opens, but due to it being an inductor, Faraday and Lenz conspire against us: V = -L di/dt.

When we want to open the relay, we need to set the GPIO on a high-impedance state, or at least the opposite state to which we used to turn it on. Let’s suppose we activate it by connecting it to ground, so ideally we should now configure an open-drain pin or at least connect it to the positive power supply. Being the relay coil an inductor, its current can’t stop flowing immediately, it will try to keep flowing. Depending on how hard it is for the inductor “to keep this current constant” (di/dt), it will develop a voltage on its terminals, and this will have the polarity it needs to cause that current to flow, and a value proportional to the inductance. That polarity causes the voltage at the microcontroller pin to be higher than its power supply voltage, as much as it needs in order to cause that current to flow. On which path will this current flow ? Ideally through a flywheel diode, connected in parallel with the relay coil, using the appropriate polarity. Being it absent, through wherever the induced high-voltage may find the path; in this very case, through the protection diode in the GPIO, returning to the relay by the power trace that connects them (in the opposite direction, producing a voltage raise in the microcontroller power supply), if we set the pin in a high-impedance state. If, on the other hand, we set the pin as a positive output, it will have to flow through the P-channel MOSFET, also in the opposite direction, probably through the intrinsic diode that can be found in the crystalline structure, and usually behaves as protection diode; or trough a real protection diode, whatever presents the lower impedance, and following the same path we already mentioned.

Let’s assume those protection diodes are designed to withstand the current flowing through the relay coil, and the rest of the circuit can cope with these effects without issues. What happens if we replicate this design using a microcontroller with no built-in protection diodes ? (These diodes are often undesirable, but this article will become too long…)

Some paragraphs above we said “through wherever the induced high-voltage may find the way”. Sometimes that path can be through some internal peripheral, producing erratic behavior; others, something much more risky can happen.

In semiconductor chip structures, some parasitic PNPN structures can be formed; this is an undesired thyristor. This thyristor may trigger via a trigger current if something is able to inject enough current through the block operating as the gate (depending on polarity); or via overvoltage, if enough voltage develops at the ends of this structure, exceeding its breakdown voltage. This parasitic thyristor then turns on and will stay on until the power supply is removed; most likely the crystalline structure will melt as it isn’t able to dissipate the excess of heat, forming a short circuit. Latch-up.

Therefore, it is often much more educational to control the relay using a transistor and place the necessary flywheel diode, carrying the relay current away from the A/D converter. Otherwise, we can place a comment warning us on the side effects we might have and the components we’ve been able to remove from the circuit thanks to this fancy feature; this might arise enough curiosity on the reader and push him to investigate further.

Even though new fabrication technologies minimize latch-up possibility, it is always convenient to place series resistors on those pins that might be exposed to voltages higher than the power supply voltage, in order to limit a possible trigger current and also help to extinguish current through the thyristor, or at least limit it so it does not destroy the crystalline structure.

TLS, Transport Layer Security

Those who want to connect to a server often require having the possibility to verify it is the desired server and not an impersonator. Those who offer a service might require some tool to authenticate the identity of incoming connections, that is, to determine those really belong to authorized users.

TLS(Transport Layer Security) allows mutually authenticating two parties at a conversation, and keeping its integrity. Authentication is done by means of X.509 certificates, and after that, keys are derived that allow transferred information to be encrypted. This means that whatever is sent can’t be observed with a network sniffer, and the only way to impersonate one of those entities is by having its certificate and the private key from which it’s been derived. TLS is a complex authentication scheme, requiring some certifying authority who generates keys and certificates used in this process. Due to it being a follow up to SSL, it is common to find references to this name.

The simplest form of operation, and maybe the most used, is server authentication; this happens for example when our web browser requests a page in the Internet using HTTPS (HTTP Secure). Before proceeding with the HTTP session, a handshake takes place that allows for validating the authenticity and establishing a secure connection, in the sense that the other end is identified and information integrity is protected. Web browsers know several certification authorities (have their certificates) and can validate a site by checking the signature in their credentials.

The other form of operation takes place in many IoT networks, when a device connects to “the cloud”. This time not only the server certificate is validated, the device certificate is validated too, that is there is a mutual authentication.

The details of this validation process depend on the method both parties agree to use, though roughly it consists in sending some information encrypted with the receiver’s public key, the receiver can only be able to decrypt and answer if he has the corresponding private key.

Even though authentication is a slow process involving Public Key Cryptography operations, the data transfer phase uses symmetric key encryption based on keys derived from the former process.

As we suggested at the beginning of this article, the security in this scheme resides on the impossibility of impersonating the Certification Authority, whose private key must be safely stored and protected from prying eyes.